Introduction

Quick commerce, or q-commerce, is changing the way consumers buy groceries, household essentials, and ready-made food. Platforms such as Zepto, Blinkit, Instamart, Getir, and Gorillas have compressed the delivery model into an ultra-fast one, with orders sometimes arriving within 10 to 20 minutes. As these apps grow in popularity, they serve customers and also generate a rich dataset on prices, product availability, promotions, and consumer behavior.

This data is valuable to businesses of all kinds: FMCG and retail brands, cloud kitchens, and market researchers. It reveals how categories are priced, which promotions work, and how consumer preferences differ across cities or even countries. The difficulty is that the data is dynamic and can change every hour, so tracking it manually is impractical. That’s where scraping quick commerce APIs comes in.

By learning how to effectively scrape APIs from platforms like Zepto and Blinkit, businesses can build structured datasets that reflect real-time market intelligence. In this blog, we’ll explore what quick commerce APIs are, how to scrape them effectively, challenges to be aware of, best practices, and how Food Data Scraping is central to this entire process.

Understanding Quick Commerce APIs

An API (Application Programming Interface) is essentially a bridge that allows systems to communicate. For quick commerce platforms, APIs serve data related to:

- Product catalogs (names, SKUs, categories, brands).

- Prices (regular vs. discounted, dynamic pricing).

- Promotions (flash sales, bank offers, bundles).

- Inventory (in stock, low stock, out of stock).

- Delivery estimates (timelines and fees).

Platforms like Zepto or Blinkit may offer official APIs to developers, but for the most part companies rely on custom scraping APIs to get the information they need. These scraping APIs pull the same structured data that powers the platforms’ mobile apps and deliver it in a usable format.

Why Scraping Quick Commerce APIs Matters

Quick commerce is built on speed, and so is its data. Prices, stock levels, and offers change throughout the day depending on demand, time slots, or regional supply.

By scraping APIs effectively, businesses can:

- Benchmark Prices: Compare how products like rice or snacks are priced across Zepto and Blinkit in different cities.

- Track Promotions: Capture when discounts are launched, their frequency, and their impact.

- Analyze Demand Trends: Study which products go out of stock fastest.

- Plan Regional Strategies: Adapt supply chains and promotions to hyperlocal demand.

- Gain Competitive Intelligence: Monitor competitors’ strategies at scale.

Without automated scraping, these insights remain hidden and untapped.

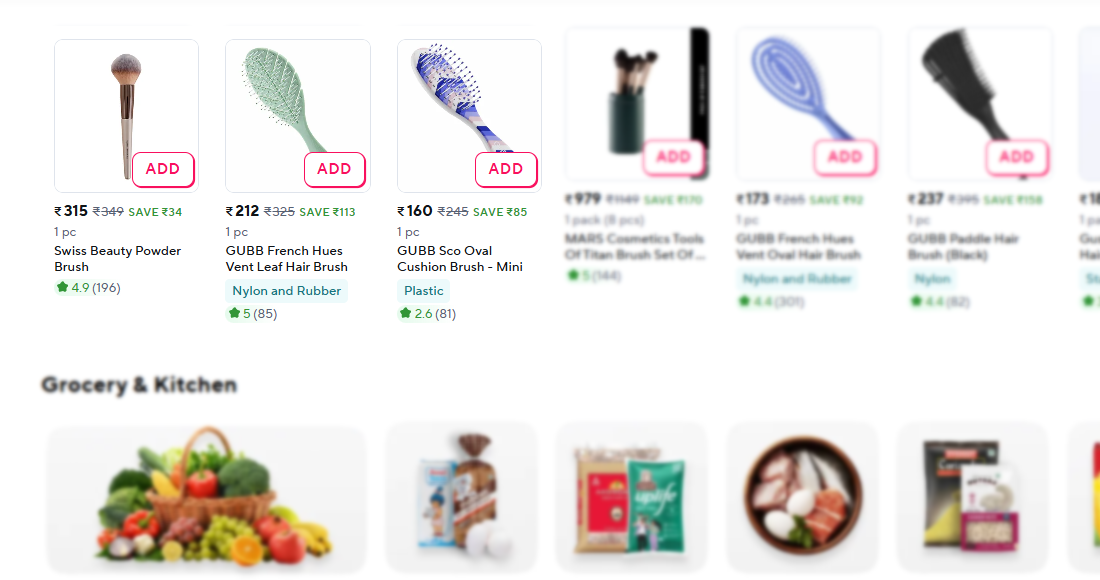

What Data Can Be Extracted from Zepto, Blinkit, and Others?

While each platform has its own data structure, scraping quick commerce APIs can provide access to:

Product Data

- Product names, categories, and SKUs.

- Pack sizes (500g, 1L, family packs).

- Brand details (local vs. international).

Pricing Data

- MRP vs. discounted price.

- Dynamic pricing (changes based on demand).

- Regional differences (same product priced differently in Delhi vs. Bangalore).

Inventory Data

- Availability status (in stock, out of stock).

- Regional stock variations.

- Seasonal availability.

Promotional Data

- Flash sales, bundle offers, cashback campaigns.

- Payment-linked discounts (Paytm, PhonePe, UPI).

- Festival promotions (Diwali, Eid, Christmas).

Delivery Insights

- Minimum order values.

- Delivery charges per PIN code.

- Average delivery time estimates.

By combining this into a dataset, businesses create a live snapshot of the q-commerce ecosystem.
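The fields listed above can be gathered into one record per observation. The sketch below is a minimal illustration, not a schema used by any platform: the class name `ProductSnapshot`, its field names, and the sample values are all assumptions chosen for this example.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class ProductSnapshot:
    """One observation of one product on one platform at one time."""
    platform: str            # e.g. "zepto" or "blinkit"
    pin_code: str            # delivery location the price applies to
    name: str
    brand: str
    pack_size: str           # raw pack-size text, e.g. "500g"
    mrp: float               # listed maximum retail price
    selling_price: float     # price after discount
    in_stock: bool
    delivery_fee: Optional[float] = None
    eta_minutes: Optional[int] = None
    captured_at: str = ""    # ISO 8601 timestamp of the scrape

    @property
    def discount_pct(self) -> float:
        """Discount relative to MRP, in percent (0.0 if MRP is missing)."""
        if self.mrp <= 0:
            return 0.0
        return round((self.mrp - self.selling_price) / self.mrp * 100, 2)


# Hypothetical sample record; none of these values come from a real platform.
snapshot = ProductSnapshot(
    platform="blinkit", pin_code="560001", name="Potato Chips",
    brand="ExampleBrand", pack_size="100g", mrp=50.0, selling_price=40.0,
    in_stock=True, captured_at="2025-01-01T10:00:00+05:30",
)
print(snapshot.discount_pct)
print(asdict(snapshot)["platform"])
```

Keeping the timestamp and PIN code on every record is what lets later analysis compare the same product across time and location.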

How to Scrape Quick Commerce APIs Effectively

Successful scraping requires technical accuracy, strategic planning, and an understanding of the legal boundaries that apply.

Step 1: Identify Your Goals

Decide whether your focus is on product-level analysis, category benchmarking, or full platform monitoring. For instance, a beverage company might only monitor the “Beverages” category, while a market research firm may scrape all categories.

Step 2: Locate API Endpoints

Most quick commerce apps rely on APIs to power their mobile interfaces. By inspecting network requests while using the app, you can identify endpoints for product listings, pricing, or promotions.
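As a rough illustration of this step, suppose the captured traffic has been exported as a HAR file, the JSON archive format produced by browser developer tools and many debugging proxies. The sketch below lists the distinct endpoints that returned JSON. The function names and the `example.com` URLs are made up for the example.

```python
import json
from urllib.parse import urlsplit


def load_har(path: str) -> dict:
    """Read a HAR export from disk (HAR files are plain JSON)."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def json_endpoints(har: dict) -> list[str]:
    """Return the distinct scheme://host/path of every JSON response in a HAR log."""
    seen = []
    for entry in har.get("log", {}).get("entries", []):
        mime = entry.get("response", {}).get("content", {}).get("mimeType", "")
        if "json" not in mime.lower():
            continue  # skip images, scripts, HTML, and other non-API traffic
        parts = urlsplit(entry["request"]["url"])
        endpoint = f"{parts.scheme}://{parts.netloc}{parts.path}"
        if endpoint not in seen:
            seen.append(endpoint)
    return seen


# Tiny hand-written HAR fragment standing in for a real export.
sample_har = {
    "log": {
        "entries": [
            {
                "request": {"url": "https://api.example.com/v1/products?category=snacks"},
                "response": {"content": {"mimeType": "application/json; charset=utf-8"}},
            },
            {
                "request": {"url": "https://cdn.example.com/logo.png"},
                "response": {"content": {"mimeType": "image/png"}},
            },
            {
                "request": {"url": "https://api.example.com/v1/products?category=dairy"},
                "response": {"content": {"mimeType": "application/json"}},
            },
        ]
    }
}
print(json_endpoints(sample_har))
```

Stripping the query string groups requests that differ only in parameters, which makes it easier to see how many distinct endpoints the app actually calls.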

Step 3: Authentication and Headers

Some APIs require tokens or keys for access. Custom scrapers simulate app requests by using proper headers, cookies, and authentication tokens.

Step 4: Build the Scraper

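Use Python libraries such as Requests or Scrapy to call the endpoints and parse their JSON responses. A browser automation tool like Selenium is useful mainly when the data is reachable only through a rendered page.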

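Example (illustrative; the endpoint, token, and response fields are placeholders):

    import requests

    # Placeholder endpoint and token: substitute the values you actually observe.
    url = "https://api.zepto.com/v1/products"
    params = {"category": "snacks", "location": "Mumbai"}
    headers = {"Authorization": "Bearer YOUR_API_KEY"}

    response = requests.get(url, params=params, headers=headers, timeout=10)
    response.raise_for_status()  # stop early on 4xx/5xx responses
    data = response.json()

    # The "products" key and its fields are assumed for illustration.
    for product in data.get("products", []):
        print(product["name"], product["price"], product["availability"])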
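The request above assumes the call succeeds on the first attempt. In practice a scraper may hit rate limits or temporary server errors, so a common pattern is to retry with exponential backoff. The sketch below is one possible shape for that. `RetryableError`, `fetch_with_backoff`, and the fake `flaky_fetch` are hypothetical names, and the fetch function is injected so the example runs without any network access.

```python
import time


class RetryableError(Exception):
    """Raised by a fetch function for failures worth retrying (e.g. HTTP 429 or 503)."""


def fetch_with_backoff(fetch, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch() until it succeeds, doubling the wait after each retryable failure."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)


# Demonstration with a fake fetch that fails twice, then succeeds.
calls = {"count": 0}
waits = []  # records the delays instead of actually sleeping


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RetryableError("simulated rate limit")
    return {"products": []}


result = fetch_with_backoff(flaky_fetch, sleep=waits.append)
print(result, waits)
```

In a real scraper, the injected `fetch` would wrap the HTTP call and raise `RetryableError` only for status codes that are worth retrying.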

Step 5: Normalize Data

- Convert units into consistent formats (e.g., kg, g, L).

- Standardize currency for cross-country comparisons.

- Align product categories across platforms.
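Unit normalization can be sketched as follows. This is a minimal example that handles only the metric units mentioned above. The function names, the regular expression, and the choice of grams and millilitres as base units are assumptions for illustration.

```python
import re

# Conversion factors to a base unit: grams for weight, millilitres for volume.
_UNIT_FACTORS = {
    "g": ("g", 1.0), "kg": ("g", 1000.0),
    "ml": ("ml", 1.0), "l": ("ml", 1000.0),
}
_PACK_RE = re.compile(r"(\d+(?:\.\d+)?)\s*(kg|g|ml|l)\b", re.IGNORECASE)


def normalize_pack_size(text: str):
    """Parse '500g', '1 L', '1.5kg' into (quantity, base_unit); None if unparseable."""
    match = _PACK_RE.search(text)
    if match is None:
        return None
    base_unit, factor = _UNIT_FACTORS[match.group(2).lower()]
    return float(match.group(1)) * factor, base_unit


def price_per_100(price: float, pack_text: str):
    """Price per 100 g or 100 ml, a common basis for comparing pack sizes."""
    parsed = normalize_pack_size(pack_text)
    if parsed is None or parsed[0] == 0:
        return None
    return round(price / parsed[0] * 100, 2)


print(normalize_pack_size("1 L"))
print(price_per_100(90.0, "1.5kg"))
```

Labels with no measurable quantity, such as "family pack", return `None` so they can be flagged for manual review.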

Step 6: Store Data

Save datasets in SQL, MongoDB, or cloud warehouses. For large-scale scraping, use big data infrastructure like AWS S3 or Google BigQuery.
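For a small-scale starting point, the SQL option can be illustrated with SQLite from the Python standard library. The table name, columns, and sample rows below are assumptions, and a production setup would likely use a server database or warehouse instead.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS price_snapshots (
    platform      TEXT NOT NULL,
    pin_code      TEXT NOT NULL,
    product_name  TEXT NOT NULL,
    selling_price REAL NOT NULL,
    in_stock      INTEGER NOT NULL,
    captured_at   TEXT NOT NULL,
    PRIMARY KEY (platform, pin_code, product_name, captured_at)
)
"""


def save_snapshots(conn: sqlite3.Connection, rows: list[tuple]) -> int:
    """Insert rows, skipping exact duplicates; return the table's row count."""
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT OR IGNORE INTO price_snapshots VALUES (?, ?, ?, ?, ?, ?)", rows
    )
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM price_snapshots").fetchone()[0]


conn = sqlite3.connect(":memory:")  # use a file path for persistent storage
rows = [
    ("zepto", "400001", "potato chips 100g", 20.0, 1, "2025-01-01T10:00:00"),
    ("blinkit", "400001", "potato chips 100g", 18.0, 1, "2025-01-01T10:00:00"),
]
print(save_snapshots(conn, rows))
print(save_snapshots(conn, rows))  # re-running the same batch adds nothing
```

Including the capture timestamp in the primary key keeps a full price history while making repeated loads of the same batch harmless.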

Step 7: Analyze and Visualize

Feed the cleaned data into BI dashboards (Power BI, Tableau) or predictive models for actionable insights.
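Before the data reaches a dashboard, a first-pass benchmark can be computed directly. The sketch below finds the cheapest platform and the price spread for each product. The function name `price_gap_report` and the sample prices are invented for illustration.

```python
from collections import defaultdict


def price_gap_report(rows):
    """For each product, report the cheapest platform and the max-min price spread."""
    by_product = defaultdict(dict)
    for platform, product, price in rows:
        by_product[product][platform] = price

    report = {}
    for product, prices in by_product.items():
        cheapest = min(prices, key=prices.get)  # first platform wins a tie
        spread = max(prices.values()) - min(prices.values())
        report[product] = {"cheapest": cheapest, "spread": round(spread, 2)}
    return report


# Hypothetical normalized observations: (platform, product, selling price).
observations = [
    ("zepto", "rice 1kg", 62.0),
    ("blinkit", "rice 1kg", 60.0),
    ("zepto", "chips 100g", 20.0),
    ("blinkit", "chips 100g", 20.0),
]
report = price_gap_report(observations)
print(report)
```

This only works once product names have been aligned across platforms, which is why normalization comes first.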

Challenges in Scraping Quick Commerce APIs

Scraping at scale is not without hurdles.

- Anti-Scraping Defenses: Platforms use CAPTCHAs, bot detection, and IP blocking to deter automated access.

- Dynamic Changes: Prices and inventory change several times a day.

- Regional Complexity: Broad geographic coverage requires collecting data at the granular PIN-code level.

- Legal and Ethical Concerns: Scraping must adhere to platforms’ terms of service and to data protection laws.

- Normalization Needs: Vendors use different naming conventions, such as “Potato Chips 100g” and “chips-100g pack”, which need to be cleaned and aligned.

These challenges highlight the need for robust scraping strategies and ethical practices.
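The naming problem can be made concrete with a small matching sketch. It compares listing names by their shared tokens (Jaccard similarity), which is one simple approach among many and is not presented as what any platform or vendor uses. The noise-word list and function names are assumptions.

```python
import re

# Words that carry no product identity; this list is an illustrative assumption.
_NOISE = {"pack", "pkt", "pouch", "of"}


def name_tokens(name: str) -> frozenset:
    """Lowercase a listing name and split it into identity-bearing tokens."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return frozenset(t for t in tokens if t not in _NOISE)


def name_similarity(a: str, b: str) -> float:
    """Jaccard similarity of the two token sets (1.0 = identical, 0.0 = disjoint)."""
    ta, tb = name_tokens(a), name_tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


print(name_tokens("chips-100g pack"))
print(name_similarity("Potato Chips 100g", "chips-100g pack"))
print(name_similarity("Potato Chips 100g", "Basmati Rice 1kg"))
```

The two chip listings share two of three distinct tokens, while the rice listing shares none. Any cutoff for treating two names as the same product is a judgment call, and borderline pairs are best reviewed by hand.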

Best Practices for Effective Quick Commerce API Scraping

To maximize efficiency and insights, businesses should adopt these practices:

- Set Clear Objectives – Know exactly what you want to measure (pricing, promotions, inventory).

- Use Rotating Proxies – Avoid IP bans by rotating addresses.

- Schedule Automation – Update datasets frequently to reflect real-time changes.

- Normalize Consistently – Standardize product naming and units across platforms.

- Leverage AI for Forecasting – Combine scraped data with machine learning for demand predictions.

- Ensure Compliance – Always operate within legal and ethical frameworks.

- Partner with Experts – Collaborate with data providers for scalability and accuracy.
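The scheduling practice can be sketched as a small "what is due now" check that a cron job or task runner might call. The refresh intervals and job names below are illustrative assumptions, not recommended values.

```python
from datetime import datetime, timedelta

# Refresh intervals per data type; the values are illustrative assumptions.
REFRESH_EVERY = {
    "prices": timedelta(minutes=30),
    "inventory": timedelta(minutes=15),
    "catalog": timedelta(hours=24),
}


def due_jobs(last_run: dict, now: datetime) -> list[str]:
    """Return the data types whose last scrape is older than their interval."""
    due = []
    for job, interval in REFRESH_EVERY.items():
        previous = last_run.get(job)
        if previous is None or now - previous >= interval:
            due.append(job)
    return due


now = datetime(2025, 1, 1, 12, 0)
last_run = {
    "prices": datetime(2025, 1, 1, 11, 45),     # 15 minutes ago: not yet due
    "inventory": datetime(2025, 1, 1, 11, 40),  # 20 minutes ago: due
    # "catalog" has never run, so it is due
}
print(due_jobs(last_run, now))
```

Refreshing fast-moving data such as inventory more often than slow-moving data such as the catalog keeps the dataset current without sending more requests than necessary.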

Use Cases of Scraping Quick Commerce APIs

FMCG Brands

A packaged snack manufacturer can scrape Blinkit and Zepto APIs to track product placement, pricing, and competitor offers in different cities.

Retailers

Supermarkets can benchmark their in-store pricing against q-commerce platforms and adjust promotions.

Cloud Kitchens & Restaurants

Kitchens can monitor ingredient costs (oil, rice, and vegetables) in real time and adjust menu pricing.

Market Analysts

Researchers can track global trends, such as rising demand for organic or plant-based foods.

Investors

Venture capital firms can evaluate platform growth by analyzing SKU diversity, pricing strategies, and regional adoption.

Global Relevance of Quick Commerce API Scraping

- India: Zepto, Blinkit, and Instamart dominate, focusing on price-sensitive markets.

- Europe: Getir and Gorillas emphasize premium and organic products.

- Middle East: Talabat Mart leverages regional shopping patterns like Ramadan surges.

- Latin America: Rappi integrates groceries with food, pharmacy, and lifestyle products.

Building a global quick commerce dataset by scraping APIs across these regions gives multinational brands a consistent way to benchmark themselves across borders.

The Future of Quick Commerce Data Scraping

In 2025 and beyond, quick commerce API scraping will evolve alongside AI and predictive analytics. Businesses won’t just track changes; they’ll anticipate trends before they occur.

- Predictive Promotions: AI will forecast when competitors will launch discounts.

- Dynamic Price Adjustments: Automated systems will adjust prices in real time based on competitor data.

- Personalized Recommendations: Scraping will feed recommendation engines for hyper-personalized marketing.

- Supply Chain Synchronization: Procurement will align with live demand signals.

The future is not just about scraping data but about transforming it into proactive strategies.

Conclusion: The Role of Food Data Scraping

Quick commerce companies like Zepto, Blinkit, and Instamart are reshaping the retail space. The real prize for businesses is the data these platforms hold: trends in prices, promotions, and consumer preferences. Scraping quick commerce APIs is how businesses keep that information current.

By doing so, businesses can:

- Benchmark competitors with precision.

- Forecast demand and adapt supply chains.

- Design smarter, more effective promotions.

- Expand strategically into new regions.

At the foundation of this entire process is Food Data Scraping—the technology that transforms raw, unstructured data from quick commerce platforms into structured insights. For FMCG brands, restaurants, analysts, and investors, food data scraping is not just a technical tool—it is the engine of growth, innovation, and global competitiveness in the quick commerce economy.